Yesterday, I gave a talk at the American Association of Physics Teachers’ Summer Meeting about assessment practices that combine rigour, accessibility, and sustainability to transform relationships to power. I tracked questions using a web form and also provided slips of paper people could write questions on; as a result, the workshop’s “Curiosity Tracking Spreadsheet” is now available. This itself is one of the practices I was presenting about.

So, for those who had questions that we didn’t have time to answer, and anyone else who’s curious, here’s what the wonderful participants in that session contributed.

Overall

What does a typical class day look like?

We have a few typical class days.

1: Research/Measurement

Students are finding information and assessing its ability to answer their questions. Some are in the shop designing experiments and measuring things, some are reading the textbook, some are watching YouTube videos, some are documenting what they’ve found using the Rubric for Assessing Evidence (see below), in some format that they can give to me. I review it with them to make sure it’s ready to share with the class.

2: Students Discuss Data in Groups

Students get into groups and review the evidence that’s come in this week. This might mean that I make a class set of photocopies of a student’s measurement data, or email everyone the link to a screencast that a student made, etc. I probably batch up the evidence by topic (this group gets three people’s work that relates primarily to resistance; this group gets four people’s work that relates primarily to charge; etc.).

The students review the evidence against the rubric and decide what, if anything, they can conclude that is backed up by at least two people’s data. They, again, use the criteria on the Rubric for Assessing Evidence (it has to be clear enough that they can give two representations; it has to not have major contradictions with other things we’ve learned). The group whiteboards their proposal.

3: Students (or sometimes the Instructor) Present to the Whole Class

The rest of the class checks to make sure that the proposals meet the requirements of the Rubric for Assessing Evidence. They make suggestions to strengthen and clarify the proposal. We check for consensus on whether to add it to the class model. Along the way, new questions get generated, added to the Curiosity-Tracking Spreadsheet, and the cycle continues.

4: Direct Instruction

I present something, usually a measurement technique, sometimes a mathematical technique, that I think is needed for the investigations that students are working on. Often students bring lab equipment to class for practice, or spend class time solving problems.

Do you see this as a humanities/liberal arts approach?

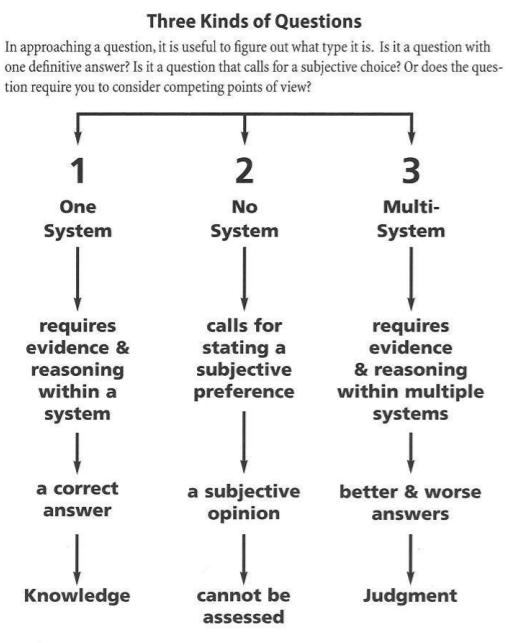

I see it as a critical thinking approach, but within a very specific meaning of critical thinking. I use the Foundation for Critical Thinking’s Miniature Guide to Critical Thinking Concepts and Tools a lot. They expand beyond the fact/opinion binary in this way:

In this model, facts are only “true” or “false” within a system. Students will often argue along the lines that it is “objectively true” that they are sitting on their chair. Which is true, in the system of everyday thinking. In the system of subatomic particles, the electrons in their pants are repelling the electrons in the chair, and nothing ever touches anything else. Breaking out of the binary model allows for “judgment calls”, which can’t be right or wrong; they are better or worse, depending on how well they meet the criteria you chose. This helps us break out of the “well you can’t say I’m wrong because that’s just my opinion” black hole.

Standards-Based Grading and Reassessment

(for background, here are some details on my SBG implementation)

You say skills testing can be repeated without penalty and any number of times, which is great (recognition that everyone learns at different paces) but, in a course, there is an absolute cut-off. How do you square that?

This is a situation where the best I can do is tell students what limitations I don’t have the power to change. At the end of the semester, if they haven’t mastered the required skills, they don’t get a passing grade. I have lots of techniques for pushing back against this; I can, for example, give a grade of “Incomplete”. My institution allows for something called a “learning contract”, as well as a “supplemental evaluation”, which can be completed up to 2 weeks after the end of the semester. I will often do this.

Of course, it connects with a more broad-based student advising strategy; if students are falling behind, it’s not a surprise at the end of the semester; we will have talked about it and tried to figure out what they need (part-time study? tutoring? financial assistance? food?) well before then.

Most of the time, the distribution here is quite bimodal. Most students complete the required elements within 15 weeks; those who don’t are very far behind and have been since early in the semester, often for larger reasons (they realize they don’t actually want to be in this field, they don’t have the supports they need to be in school, etc.). I’m not in a position where people fail the course by a few points. They either pass, or they have a grade in the single digits.

But yes, ultimately, the end of the semester is a hard limit. I do wonder what it would be like if the school allowed for something like “rolling” enrolment, where students worked on things until they mastered them, and then moved on to whatever builds on those skills? It would reduce the cohesion of the cohort, which would make it harder to engage in collective strategies. Not impossible, I bet… does anyone know of a post-secondary program doing something like this?

Do you have a reassessment crunch at the end of the term?

A little, but it’s manageable, and much less than it used to be. I require reassessments to be attempted within three weeks. If a student attempts reassessment and doesn’t succeed, the clock starts again at three weeks. So it’s not possible to save up every assessment until the end of the year. Also, later work in the semester naturally ends up demonstrating earlier skills, so sometimes I talk to a student and say, “this work you did on parallel circuits also demonstrates your knowledge of Ohm’s law, so I’ve accepted it as a demonstration of both.”
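As a minimal sketch (not the actual gradebook), the three-week clock could be modelled like this in Python. The function name, the constant, and the example dates are all hypothetical; the only rule taken from the post is that each unsuccessful attempt restarts a three-week window.

```python
from datetime import date, timedelta

# Assumption: the window is exactly 21 calendar days.
REASSESSMENT_WINDOW = timedelta(weeks=3)


def reassessment_deadline(assessed_on, attempts=()):
    """Return the date by which the next reassessment attempt is due.

    assessed_on: date of the original assessment.
    attempts: dates of unsuccessful reassessment attempts so far.
    The clock restarts from the most recent of these dates.
    """
    last_event = max([assessed_on, *attempts])
    return last_event + REASSESSMENT_WINDOW


# Hypothetical example: assessed on 2 Sept, one failed attempt on 20 Sept.
first_deadline = reassessment_deadline(date(2024, 9, 2))
after_retry = reassessment_deadline(date(2024, 9, 2), [date(2024, 9, 20)])
```

Because the deadline always follows the most recent attempt, a student who keeps trying never runs out of time, but one who waits cannot bank every skill until the last week.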

In the context of SBG, how does the grading process account for students who demonstrate mastery of a skill during one assignment but then fail to demonstrate mastery of that skill during a later assignment?

This one is tough. If I were willing to sacrifice everything else on the altar of rigour, I would change the gradebook to show that the student’s previous mastery seems to need work. But I don’t. The downsides on class morale and confidence, not to mention my own stamina, are too much. I might talk to a student about it, remind them to look back at their previous work, and hope that, in resubmitting their work, they do the practice they need to strengthen that older skill. I keep note of it in my mind. But I never let grades go down.

Assessing Evidence

(I discussed a rubric for assessing the quality of evidence, using critical thinking skills, that the students use.)

I’m confused about what this looks like in practice. When do the students use the rubrics?

One way we use it is when I assign it for homework.

I could, for example, assign a reading on the limitations of op-amp performance. Often, I assign a section of the textbook and the corresponding Wikipedia article. The students read the two sources and turn in a completed rubric. I grade them for completeness, not correctness. For example, the rubric asks students to identify what they didn’t understand in the reading. If they correctly identify what they don’t know, then the assignment is complete. It gives them a reason to read closely, and it gives me a list of concepts students need more information on.

At the bottom of the rubric, the student must indicate whether they think this information is ready to be accepted. If they don’t understand it, can’t express it in a different representation, or it seems to conflict with what they’ve seen in or outside of school, then the correct conclusion is “no, this is not ready to be accepted”. The questions they raise go into our tracking spreadsheet, and we resolve the apparent contradictions before coming back to it.

As long as the student decides to accept or not accept in a way that’s coherent with the rest of what they wrote, then the assignment is “complete.” “Assess Evidence” is a standard in each topic unit, so that’s where the grade goes.

The students also use the same rubric in “real time” during student presentations. A group might present some evidence they found, proposing that we accept a new idea into the model. The rest of the class is responsible for working through the rubric, making sure they understand what the presenters are saying. At the end of the presentation, the whole class decides together whether to accept the proposal, using the rubric to bring up questions.

Finally, the students use the rubric when they’re doing research on their own. Before they submit research for consideration by the class, they have to convince themselves that the evidence they’ve found at least appears understandable and coherent to them.

Emergent Curriculum

(I let student curiosity drive the curriculum; the topics they have questions about, as long as they are related to the course outcomes in some way, go into a curiosity-tracking spreadsheet, and become our lesson plans)

What do the other columns mean in the curiosity-tracking spreadsheet?

I tag each question by topic; for a circuit analysis course, those columns indicate that the associated question is likely to lead students to learn about voltage, resistance, current, power, etc. This allows me to group students for discussion with others who are investigating similar topics, or vet the list to prioritize one topic before another, etc.
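A minimal sketch of how those topic tags could be used to form discussion groups, assuming the spreadsheet is exported as rows with a student name, a question, and a list of topic tags. The field names and sample rows are hypothetical, not the real spreadsheet layout.

```python
from collections import defaultdict


def group_by_topic(rows):
    """Map each topic tag to the students whose questions carry that tag."""
    groups = defaultdict(list)
    for row in rows:
        for topic in row["topics"]:
            # A student appears once per topic, even with several questions.
            if row["student"] not in groups[topic]:
                groups[topic].append(row["student"])
    return dict(groups)


# Hypothetical rows standing in for the tracking spreadsheet.
rows = [
    {"student": "A", "question": "Why does the bulb dim?", "topics": ["voltage", "resistance"]},
    {"student": "B", "question": "Does wire length matter?", "topics": ["resistance"]},
    {"student": "C", "question": "What limits battery life?", "topics": ["power"]},
]
groups = group_by_topic(rows)
```

A question tagged with two topics puts its author in both groups, which matches the idea that one investigation can feed more than one discussion.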

What strategies do you have to prompt the questions used for curiosity tracking?

I don’t find that I need to do a lot of prompting. At the beginning of the year, I give a few goal-less tasks: what can you do with this conductive play-dough? What can you do with these small incandescent bulbs and AA batteries? I give students a page where they can draw, write observations, and note questions. I circulate and listen to the questions students ask each other. I take note of the questions students ask me. They all go in the tracking spreadsheet.

I encourage questions by accepting them as “answers”, especially on the rubric for assessing evidence. In every section, students can submit either a statement or a question, and they are equally valid. Sometimes, if I think it’s a good fit for the student, I get hyper-excited and goofy when they ask questions, and make a point of giving specific praise for what is especially good about that question (is it especially precisely formulated? Does it inquire about causality of something that others were ignoring? Does it shed light on someone else’s idea? etc.).

In any given semester, we end up with way more questions than we could ever possibly tackle.

Does this reduce number of topics covered?

Overall it hasn’t. Because of the conjunctive grading, I control the “mandatory” skills, but that leaves 40% of the grade for “optional” skills. Students propose all kinds of things that aren’t technically in the curriculum but do relate to the learning outcomes. For example, neither our syllabus nor our textbook talks about quarks. But students often investigate them, and apply that work to increase their grade in the “Atomic Structure” unit. So for some students, it increases the topics covered.

Because that 40% of the grade doesn’t necessarily have to be done collectively, different students are investigating different things, and they may or may not present that data to the class. So besides allowing students to cover more topics together, if you look at the whole ensemble of all topics investigated by all students in the class, it can be a wide range.

But the required 60% is set by me and, while I accept any demonstration of mastery, there’s no getting around those skills. In a conventional class, I’d be teaching 100% of a set skill list, and some students would only be learning 60% of it anyway; so while it might reduce the number of skills “covered” by me, it doesn’t reduce the skills “covered” by the students. I see this as a win!
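To make the 60/40 split concrete, here is a rough sketch of one way a conjunctive grade could be computed. It is an illustration under stated assumptions, not the actual grading scheme: the post only says that the mandatory skills are required and that 40% is left for optional skills. The function name, the proportional scoring of optional skills, and the choice to return `None` when a mandatory skill is missing are all assumptions.

```python
def conjunctive_grade(mandatory, optional, mandatory_weight=60, optional_weight=40):
    """Return a percentage grade, or None if any mandatory skill is unmastered.

    mandatory, optional: dicts mapping skill name -> True/False (mastered).
    Assumption: optional credit is proportional to optional skills mastered.
    """
    # Conjunctive part: every mandatory skill must be demonstrated.
    if not all(mandatory.values()):
        return None  # the post does not say how this case is scored
    if not optional:
        return float(mandatory_weight)
    earned_fraction = sum(optional.values()) / len(optional)
    return mandatory_weight + optional_weight * earned_fraction


# Hypothetical skill names.
grade = conjunctive_grade(
    {"Ohm's law": True, "series circuits": True},
    {"quarks": True, "op-amp limits": False},
)
incomplete = conjunctive_grade({"Ohm's law": True, "series circuits": False}, {})
```

The point of the sketch is the shape of the rule: no amount of optional work substitutes for a missing mandatory skill, while optional work only ever adds to a passing grade.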

The link for the Foundation for Critical Thinking’s Miniature Guide to Critical Thinking Concepts and Tools doesn’t work for me. Is this: https://www.criticalthinking.org/files/Concepts_Tools.pdf the guide you are referring to?

Thanks Jenn, that link remarkably died between yesterday and now! Yes, you found the right thing. I updated the link in the post too.